1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

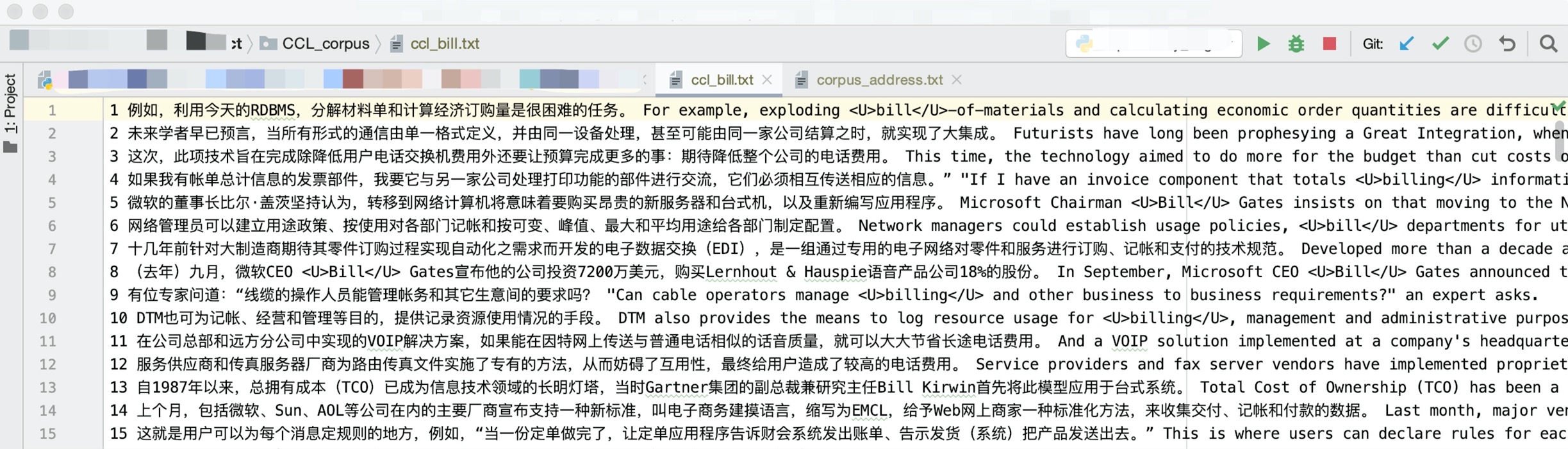

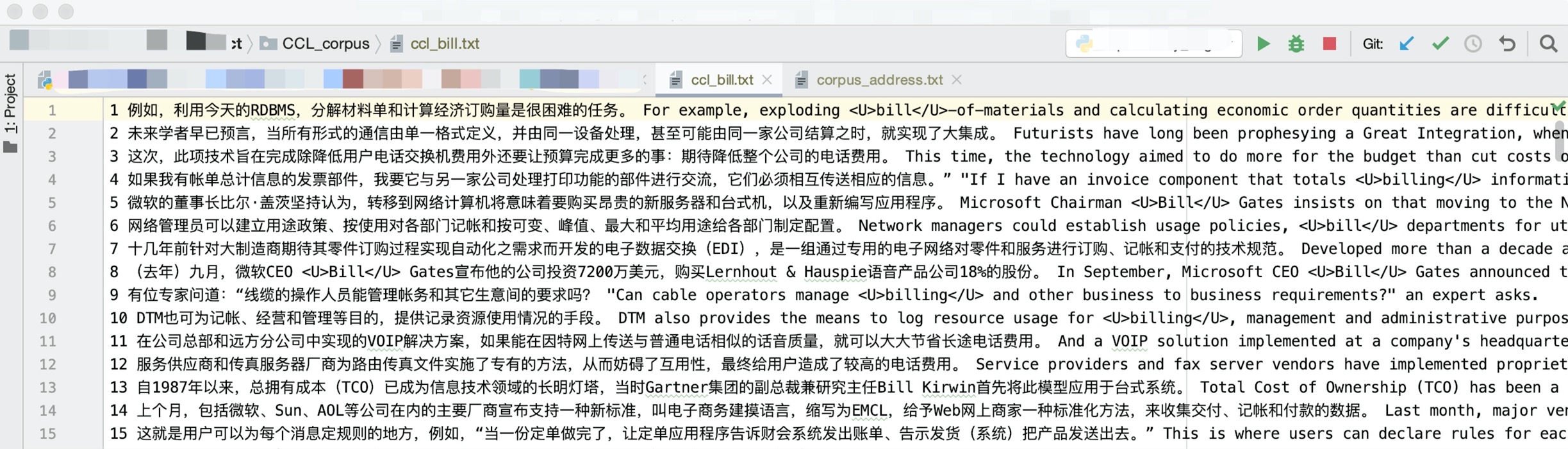

| import re

import requests

import json

import os

def extract_sentences(query:str='bill', max_left:int=300, max_right:int=300):

"""

:param query: 目标搜索词 target word

:param max_left: 左侧提取最多字数(以char为单位) maximum str length before target word

:param max_right: 右侧提取最多字数(以char为单位)minmum str length after target word

:return: None. (Generate the file containing sentences)

"""

page = 1

while True:

web = 'http://ccl.pku.edu.cn:8080/ccl_corpus/search?dir=chen&q='+query+'&LastQuery='+query+'&start='+str((page-1)*50)+'&num=50'+\

'&index=FullIndex&outputFormat=HTML&orderStyle=score&encoding=UTF-8&neighborSortLength=0&maxLeftLength='+str(max_left)+'&maxRightLength='+str(max_right)+'&isForReading=yes'

response = requests.get(web)

if response.raise_for_status():

print(response.raise_for_status())

break

url_text = response.content.decode()

se = re.search(

r'<td width=\"3%\">(\d+)<\/td><td width=\"45%\" valign=\"top\" colspan=\"3\" align=\"left\">(.+?)<\/td><td width=\"45%\" valign=\"top\" colspan=\"3\" align=\"left\">(.+?)<\/td><\/tr>',

url_text, re.S)

if not se:

break

match = re.finditer(r'<td width=\"3%\">(\d+)<\/td><td width=\"45%\" valign=\"top\" colspan=\"3\" align=\"left\">(.+?)<\/td><td width=\"45%\" valign=\"top\" colspan=\"3\" align=\"left\">(.+?)<\/td><\/tr>',

url_text, re.S)

if not os.path.isdir('../CCL_corpus'):

os.mkdir('../CCL_corpus')

with open('../CCL_corpus/ccl_'+query+'.txt','a') as f:

for m in match:

f.write(m.group(1)+' '+ re.sub(' +', ' ', re.sub('\n', ' ', m.group(2)))+' '+

re.sub(' +', ' ', re.sub('\n', ' ', m.group(3)))+'\n')

page += 1

if __name__ == "__main__":

target_words_list = ['address', 'appreciate', 'beat', 'bill', 'bond', 'column', 'cover', 'deliver', 'exploit', 'figure','perspective', 'platform', 'provision', 'rest']

for t in target_words_list:

extract_sentences(t)

|